Following the section on LCS, this section covers a second actively developing approach to evolving rule-based systems. We will see that the two areas overlap considerably and that the distinction between them is somewhat arbitrary. Nonetheless the two communities and their literatures are somewhat disjoint.

Fuzzy Logic is a major paradigm in soft computing which provides a means of approximate reasoning not found in traditional crisp logic. Genetic Fuzzy Systems (GFS) apply evolution to fuzzy learning systems in various ways: GAs, GP and Evolution Strategies have all been used. We will cover a particular form of GFS called genetic Fuzzy Rule-Based Systems (FRBS), which are also known as Learning Fuzzy Classifier Systems (LFCS) [25] and are sometimes described as "genetic learning of fuzzy rules" or (for Reinforcement Learning tasks) "fuzzy Q-learning". Like other LCS, FRBS evolve if-then rules, but in FRBS the rules are fuzzy. Most systems are Pittsburgh-style, but there are many Michigan-style examples [283,284,104,25,218,65,223]. In addition to FRBS we briefly cover genetic fuzzy NNs, but we do not cover genetic fuzzy clustering (see [74]).

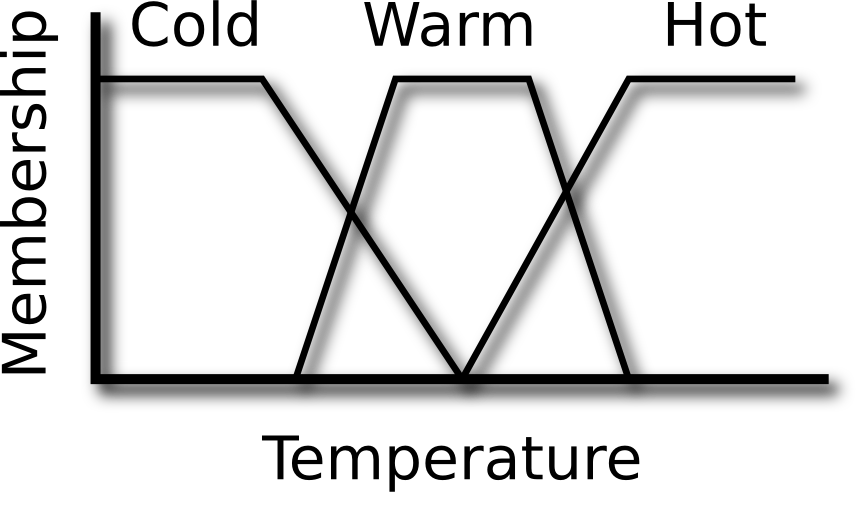

In the terminology of fuzzy logic, ordinary scalar values are called crisp values. A membership function defines the degree of match between crisp values and a set of fuzzy linguistic terms. The set of terms is a fuzzy set. The following figure shows a membership function for the set {cold, warm, hot}. Each crisp value matches each term to some degree in the interval [0,1], so, for example, a membership function might define 5° as 0.8 cold, 0.3 warm and 0.0 hot. The process of computing the membership of each term is called fuzzification and can be considered a form of discretisation. Conversely, defuzzification refers to computing a crisp value from fuzzy values.
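Fuzzification and defuzzification can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the piecewise-linear shapes, the breakpoints and the term centres are all assumptions, chosen only so that a crisp value of 5 yields the degrees quoted above (0.8 cold, 0.3 warm, 0.0 hot). The weighted-average defuzzifier is one simple method among several.

```python
def falling(x, full, zero):
    """Left-shoulder term: 1 at or below `full`, 0 at or above `zero`."""
    if x <= full:
        return 1.0
    if x >= zero:
        return 0.0
    return (zero - x) / (zero - full)


def rising(x, zero, full):
    """Right-shoulder term: 0 at or below `zero`, 1 at or above `full`."""
    if x <= zero:
        return 0.0
    if x >= full:
        return 1.0
    return (x - zero) / (full - zero)


def triangle(x, left, peak, right):
    """Triangular term: 0 outside (left, right), 1 at `peak`."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzify(temperature):
    """Map a crisp temperature to a degree in [0, 1] for each term.

    All breakpoints are assumed values for illustration.
    """
    return {
        "cold": falling(temperature, 1.0, 21.0),
        "warm": triangle(temperature, 2.0, 12.0, 22.0),
        "hot": rising(temperature, 20.0, 30.0),
    }


# Assumed representative crisp value for each term.
TERM_CENTRES = {"cold": 0.0, "warm": 12.0, "hot": 30.0}


def defuzzify(memberships):
    """Weighted average of term centres, weighted by membership degree."""
    total = sum(memberships.values())
    if total == 0:
        raise ValueError("no term has non-zero membership")
    weighted = sum(memberships[t] * TERM_CENTRES[t] for t in memberships)
    return weighted / total


degrees = fuzzify(5.0)
crisp = defuzzify(degrees)
```

Note that `defuzzify(fuzzify(x))` does not in general return `x`: fuzzification discards information, which is why it can be viewed as a form of discretisation.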

Fuzzy rules are condition/action (IF-THEN) rules composed of a set of linguistic variables (e.g. temperature, humidity) which can each take on linguistic terms (e.g. cold, warm, hot). For example:
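As a hypothetical illustration (the variables, terms and membership degrees here are assumptions, not taken from the source), the rule IF temperature is cold AND humidity is high THEN heating is high can be represented and matched as sketched below. The sketch assumes the minimum operator for AND, which is one common choice among several.

```python
from collections import namedtuple

# antecedent: dict mapping linguistic variable -> linguistic term
# consequent: (linguistic variable, linguistic term)
FuzzyRule = namedtuple("FuzzyRule", ["antecedent", "consequent"])


def firing_strength(rule, fuzzified_inputs):
    """Degree to which the rule's condition matches, using min for AND."""
    return min(
        fuzzified_inputs[variable][term]
        for variable, term in rule.antecedent.items()
    )


# Hypothetical rule and already-fuzzified inputs.
rule = FuzzyRule(
    antecedent={"temperature": "cold", "humidity": "high"},
    consequent=("heating", "high"),
)
inputs = {
    "temperature": {"cold": 0.8, "warm": 0.3, "hot": 0.0},
    "humidity": {"low": 0.1, "high": 0.6},
}

strength = firing_strength(rule, inputs)  # min(0.8, 0.6)
```

Unlike a crisp rule, which either matches or does not, the fuzzy rule matches to a degree, and that degree is what an inference engine would pass on to the consequent.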

As illustrated in figure 12 (adapted from [123]), a fuzzy rule-based system consists of:

We distinguish i) genetic tuning and ii) genetic learning of DB, RB or inference engine parameters.

It is also possible to learn components simultaneously. This may produce better results, though the larger search space makes it slower and more difficult than adapting components independently. As examples, [209] learns the DB and RB simultaneously while [129] simultaneously learns KB components and inference engine parameters.
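To make genetic tuning concrete, the sketch below evolves the three parameters of a single triangular membership function so that it fits a handful of target membership degrees. It assumes that tuning means adjusting the parameters of an existing membership function while the rules stay fixed. For brevity it uses a (1+1) evolution strategy rather than a full GA, and the training samples, step size and seed are all hypothetical.

```python
import random


def triangle(x, left, peak, right):
    """Triangular membership function: 0 outside (left, right), 1 at peak."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Hypothetical training data: (crisp temperature, desired degree of "warm").
SAMPLES = [(5.0, 0.0), (10.0, 0.5), (15.0, 1.0), (20.0, 0.5), (25.0, 0.0)]


def error(params):
    """Sum of squared errors; parameter sets that are not ordered are infeasible."""
    left, peak, right = params
    if not left < peak < right:
        return float("inf")
    return sum(
        (triangle(x, left, peak, right) - target) ** 2
        for x, target in SAMPLES
    )


def tune(initial, generations=200, step=1.0, seed=0):
    """(1+1)-ES: mutate the parent and keep the child if it is no worse."""
    rng = random.Random(seed)
    parent, parent_error = list(initial), error(initial)
    for _ in range(generations):
        child = [p + rng.gauss(0.0, step) for p in parent]
        child_error = error(child)
        if child_error <= parent_error:
            parent, parent_error = child, child_error
    return parent, parent_error


start = [0.0, 10.0, 30.0]
best, best_error = tune(start)
```

Because a child replaces the parent only when it is no worse, `best_error` can never exceed `error(start)`. Learning the rule base or several components at once would enlarge the chromosome and hence the search space, which is the trade-off described above.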

Recently [242] claimed that all existing GFS have been applied to crisp data and that with such data the benefits of GFS compared to other learning methods are limited to linguistic interpretability. However, GFS have the potential to outperform other methods on fuzzy data, and they identify three cases ([242] p. 558):

They argue GFS should use fuzzy fitness functions in such cases to deal directly with the uncertainty in the data and propose such systems as a new class of GFS to add to the taxonomy of [123].

A Neuro-Fuzzy System (NFS) or Fuzzy Neural Network (FNN) is any combination of fuzzy logic and neural networks. Among the many examples of such systems, [188] uses a GA to minimise the error of the NN, [116] uses both a GA and backpropagation to minimise error, [229] optimises a fuzzy expert system using a GA and NN, and [209] uses a NN to approximate the fitness function for a GA which adapts membership functions and control rules. See [74] for an introduction to NFS, [189] for a review of EAs, NNs and fuzzy logic from the perspective of intelligent control, and [120] for a discussion of combining the three. [150] introduces Fuzzy All-permutations Rule-Bases (FARBs) which are mathematically equivalent to NNs.

Herrera [123] p. 38 lists the following active areas within GFS:

Herrera also lists (p. 42) current issues for GFS: