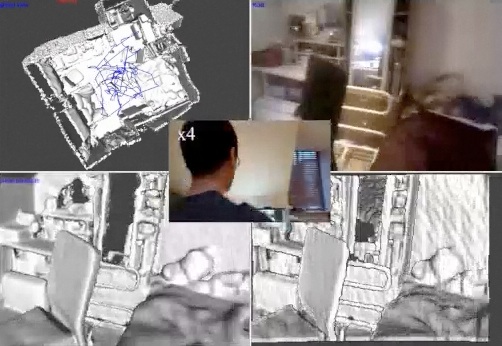

I am Professor of Computer Vision at the University of Bristol in the Department of Computer Science and a member of the Visual Information Laboratory (VIL) and the Bristol Robotics Laboratory (BRL). My research covers computer vision and its applications - robotics, wearable computing and augmented reality - and I have done a lot of work on 3-D tracking and scene reconstruction, mainly in simultaneous localisation and mapping (SLAM). Working with industry and on interdisciplinary projects is a high priority - please get in touch if you are interested in working with me. More details can be found below and in my publications.

Contact details: Department of Computer Science, University of Bristol, Merchant Venturers Building, Woodland Road, Bristol BS8 1UB, UK; T: +44 117 9545149; E: andrew at cs dot bris dot ac dot uk.

News

- Mar 22: Post-doc position available in Robot Vision for Creative Technology (2 years) - great opportunity to be part of MyWorld, a £46M programme to drive creative technology in the West of England. Research-intensive, involving 3-D tracking, visual SLAM, etc. Further details. Deadline: 24th April 2022.

- Jan 22: Pleased to start work as a CPRB Editor for IROS 2022.

- Oct 21: New paper on great work by Tom Bale on evaluating effectiveness of AR for interactive guidance in hazardous environments presented at Mobile-HCI 2021. Evaluating Prototype Augmented and Adaptive guidance system to support Industrial Plant Maintenance.

- May 21: New paper on object-based relocalisation in SLAM presented at IROS 2021, with Yuhang Ming and Xingrui Yang. Object-Augmented RGB-D SLAM for Wide-Disparity Relocalisation.

- May 21: New paper extending our ECCV "image-to-map" geolocation work using embedded spaces to aerial localisation presented at ICRA 2021, with Obed Samano Abonce and Mengjie Zhou. Global Aerial Localisation Using Image and Map Embeddings.

- Mar 21: New Research Associate post available, working with Perceptual Robotics and DNV-GL on our recently awarded Innovate UK project, looking at using vision-based localisation of drones for wind turbine inspection. Further details. Closing date for applications: 27 May 2021.

- Aug 20: New paper on geolocation of images without GPS by learning an embedded space for map tiles and images presented at ECCV 2020, with Obed Samano Abonce and Mengjie Zhou. You Are Here: Geolocation by Embedding Maps and Images.

- Jan 20: Pleased to be a member of the Advisory and Steering Groups for the Bristol VR Lab as it moves into its next stage of development.

- Jun 19: Our paper on simultaneous tracking and model parameter estimation for drone localisation wrt wind turbines has been accepted for presentation at IROS 2019 in Macau. [arXiv version].

- May 19: New paper with colleagues from Earth Sciences and Aerospace on automated 3-D reconstruction of volcanic plumes using ground-based cameras.

- May 19: Congratulations to Pilailuck Panphattarasap for passing her PhD viva for her excellent work on automated map reading (see below).

- Mar 19: New paper with Oliver Moolan-Feroze and collaborators at Perceptual Robotics on simultaneous tracking and model parameter estimation for drone localisation wrt wind turbines [arXiv version].

- Jan 19: New paper on drone localisation for wind turbine inspection using model-based tracking accepted for ICRA 2019. [arXiv version].

Research Assistants and Students

I enjoy working with people who want to discover, innovate and work with others to make things happen. If you are interested in working with me then please get in touch. If you want to do a PhD then I'd be happy to hear from you but please take a look at what I do and how we might work together before you contact me. If you are looking for funding, then any vacancies I have will be advertised on this page; otherwise, you may like to consider the various scholarships offered by the University.

Projects

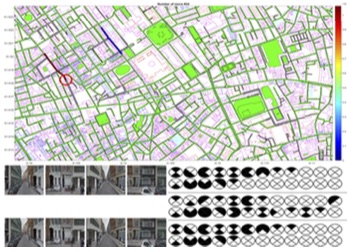

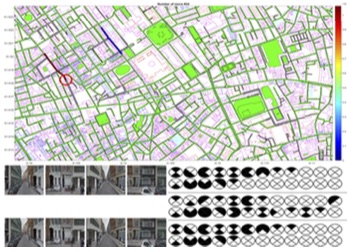

AUTOMATED MAP READING USING SEMANTIC FEATURES

New research on localising images in 2-D cartographic maps by linking semantic information, akin to human map reading. Based on minimal binary route patterns indicating presence or absence of semantic features and trained networks to detect such features in images. Leads to highly scalable map representations.

[IROS 2018 paper][Project page]

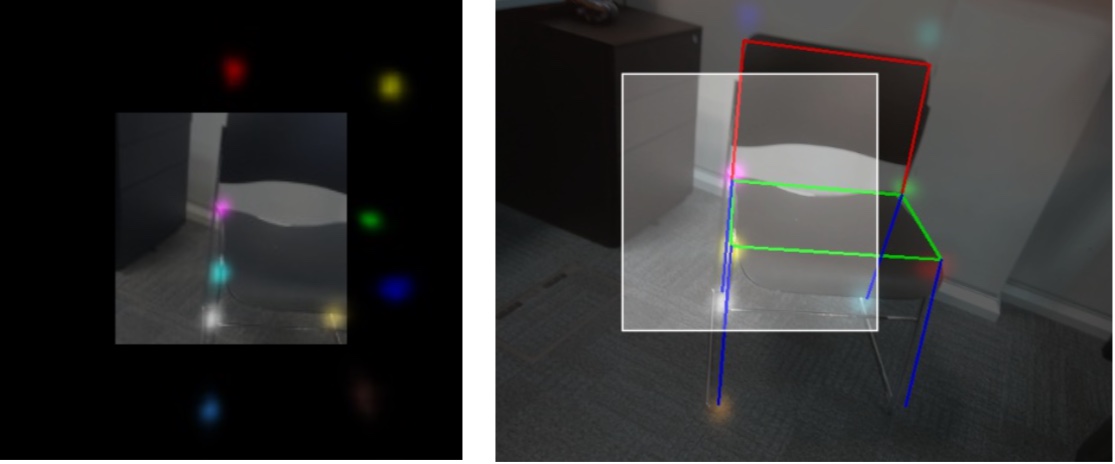

OUT-OF-VIEW MODEL-BASED TRACKING

3-D model-based tracking which uses a trained network to predict out-of-view feature points, allowing tracking when only partial views of an object are available. Designed to deal with tracking scenarios involving large objects and close view camera motion.

[IROS 2018 paper]

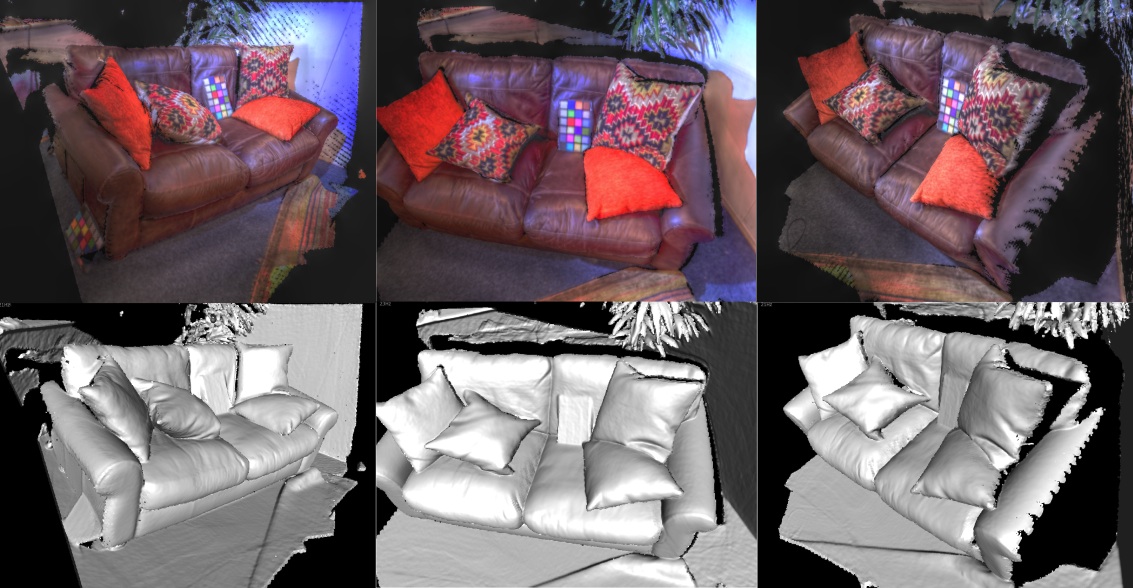

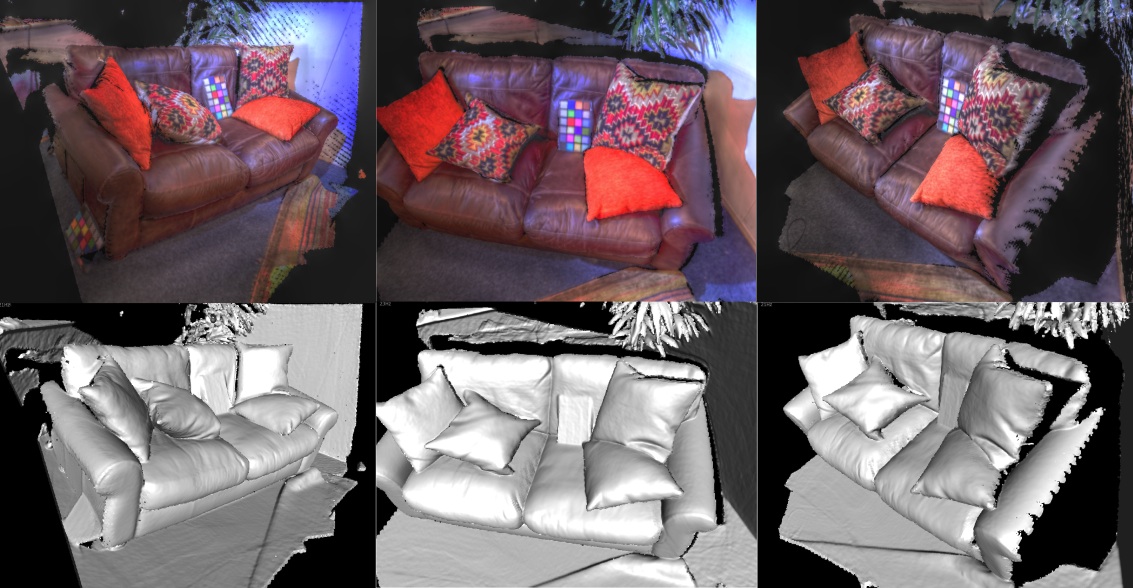

HDRFUSION: RGB-D SLAM WITH AUTO EXPOSURE

RGB-D SLAM system which is robust to appearance changes caused by RGB auto exposure and is able to fuse multiple exposure frames to build HDR scene reconstructions. Results demonstrate high tracking reliability and reconstructions with far greater dynamic range of luminosity.

[3DV 2016 paper][Project page]

LDD PLACE RECOGNITION

Place recognition using landmark distribution descriptors (LDD) which encode the spatial organisation of salient landmarks detected using edge boxes and represented using CNN features. Results demonstrate high accuracy for highly disparate views in urban environments.

[ACCV 2016 paper][Project page]

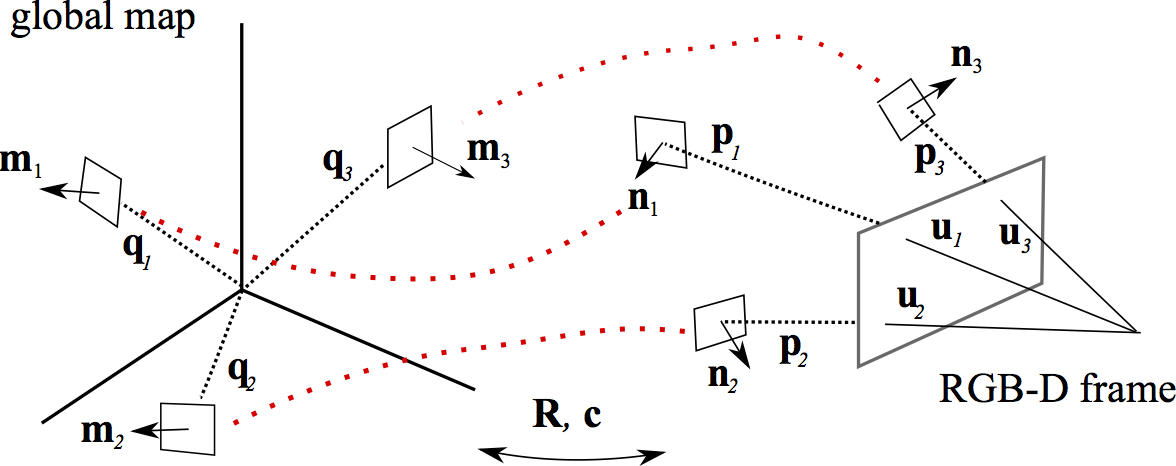

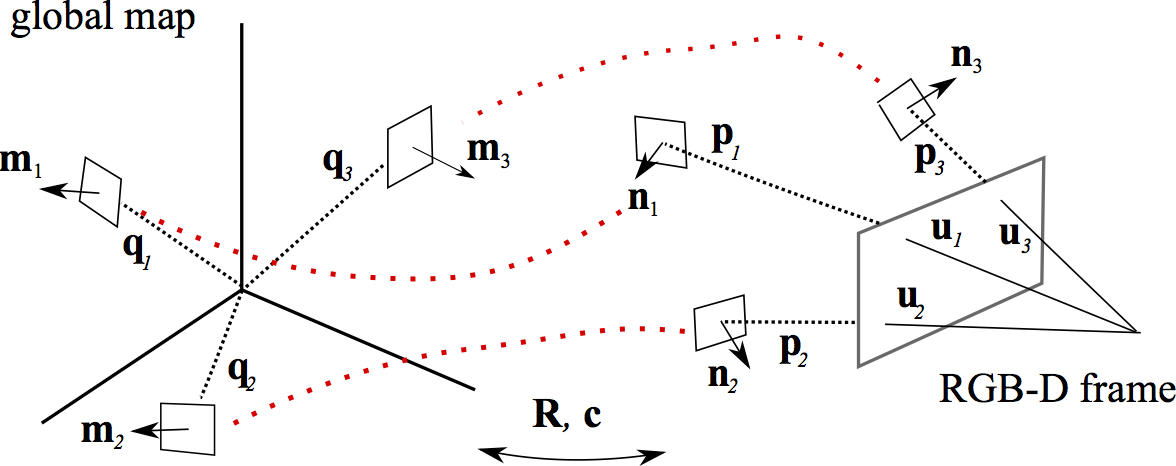

MULTI-CORRESPONDENCE 3-D POSE ESTIMATION

Novel algorithm for estimating the 3-D pose of an RGB-D sensor which uses multiple forms of correspondence - 2-D, 3-D and surface normals - to gain improved performance in terms of accuracy and robustness. Results demonstrate significant improvement over existing algorithms.

[ICRA 2016 paper][Project page]

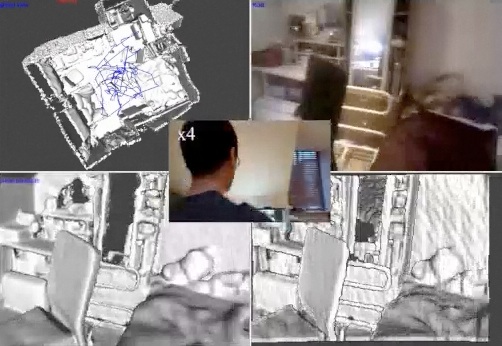

RGB-D RELOCALISATION USING PAIRWISE GEOMETRY

Fast and robust relocalisation in an RGB-D SLAM system based on the pairwise 3-D geometry of key points, encoded within a graph-type structure and combined with an efficient octree-based key point representation. Results demonstrate that the relocalisation outperforms that of other approaches.

[ICRA 2015 paper][Project page]
