'Out-of-View' Model Based Tracking

Oliver Moolan-Feroze and Andrew Calway

Presented with an incomplete view of an object, a human is often able to make predictions about the structure of the parts of the object that are not currently visible. This allows us to navigate safely around large objects and to predict the effects of various manipulations on smaller ones. In this work, we look at the problem of out-of-view prediction in the context of model-based tracking, where the camera pose (location and orientation) is estimated from incomplete views of a known object. In a typical model-based tracker, features are extracted from an image in the form of points, lines, or other higher-level cues. By matching these features to a representation of the tracked model, an estimate of the camera pose can be computed. Key to this process is extracting enough features to obtain a robust pose estimate. When only a partial view of the object is available, fewer features are visible and tracking performance suffers. By predicting features that lie out of view, we expand the set of possible correspondences and increase the robustness of the tracking.
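To make the effect of a partial view concrete, the sketch below projects a known 3-D model into the image with a standard pinhole camera and counts how many model points land inside the frame. It is a minimal illustration, not the tracker described here: the four-point model, the focal length `f`, the principal point `(cx, cy)`, the 640 x 480 image size and the function names `project` and `visible_count` are all hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def project(points: Sequence[Point3], R: Sequence[Sequence[float]], t: Point3,
            f: float, cx: float, cy: float) -> List[Optional[Point2]]:
    """Pinhole projection x = K (R X + t) of each model point X.

    Returns None for points that lie behind the camera.
    """
    out: List[Optional[Point2]] = []
    for X in points:
        # Transform the model point into camera coordinates.
        Xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
        if Xc[2] <= 0:
            out.append(None)
            continue
        # Perspective division, then shift by the principal point.
        out.append((f * Xc[0] / Xc[2] + cx, f * Xc[1] / Xc[2] + cy))
    return out


def visible_count(projected: Sequence[Optional[Point2]], width: int, height: int) -> int:
    """Number of projected points that land inside a width x height image."""
    return sum(1 for p in projected
               if p is not None and 0 <= p[0] < width and 0 <= p[1] < height)


# Hypothetical model: four corners of a 1 m square (e.g. a chair seat).
MODEL = [(-0.5, -0.5, 0.0), (0.5, -0.5, 0.0), (0.5, 0.5, 0.0), (-0.5, 0.5, 0.0)]
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

far = project(MODEL, IDENTITY, (0.0, 0.0, 2.0), f=500.0, cx=320.0, cy=240.0)
near = project(MODEL, IDENTITY, (0.6, 0.0, 1.5), f=500.0, cx=320.0, cy=240.0)
print(visible_count(far, 640, 480), visible_count(near, 640, 480))  # 4 2
```

Moving the camera closer and to one side leaves only half of the model's points in the image, so a tracker that relies on in-view features alone has half as many correspondences to work with.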

![]()

The approach we take is to learn the relationship between a partial view of an object and the configuration of salient feature points, some of which may be out of view. An example is shown above. On the left is a partial view of a chair; in the centre is the output of our algorithm, which predicts the likely locations of key feature points, shown as likelihood densities in different colours. These include points within view, about which the algorithm is more certain, and also points outside the view, which, although less certain, still give a good indication of the shape of the chair. As such, they allow robust tracking of the chair using a known skeleton model defined over the feature points, as shown on the right.
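One way to read such an output, assuming (hypothetically) that each feature point has its own heat map covering the image plus a fixed margin on every side, is to take the peak as the predicted location and the spatial spread of the map as a rough measure of uncertainty. The sketch below does this in plain Python; the margin convention and the names `heatmap_peak`, `heatmap_spread` and `out_of_view` are illustrative assumptions, not the network's actual output format.

```python
from typing import List, Tuple

HeatMap = List[List[float]]


def heatmap_peak(heat: HeatMap, margin: int) -> Tuple[int, int, float]:
    """Location (x, y) and value of the heat-map maximum, in image coordinates.

    The map is assumed to cover the image plus `margin` pixels on every side,
    so heat[r][c] corresponds to image pixel (c - margin, r - margin).
    """
    best = (0, 0, float("-inf"))
    for r, row in enumerate(heat):
        for c, v in enumerate(row):
            if v > best[2]:
                best = (c - margin, r - margin, v)
    return best


def heatmap_spread(heat: HeatMap, margin: int) -> float:
    """Mean squared distance (pixels^2) of the normalised density from its centroid."""
    cells = [(c - margin, r - margin, v)
             for r, row in enumerate(heat) for c, v in enumerate(row)]
    total = sum(v for _, _, v in cells)
    mx = sum(x * v for x, _, v in cells) / total
    my = sum(y * v for _, y, v in cells) / total
    return sum(((x - mx) ** 2 + (y - my) ** 2) * v for x, y, v in cells) / total


def out_of_view(x: float, y: float, width: int, height: int) -> bool:
    """True if image point (x, y) falls outside a width x height image."""
    return not (0 <= x < width and 0 <= y < height)


# Hypothetical 4x4 image with a 2-pixel margin, so each map is 8x8.
W = H = 4
MARGIN = 2
sharp = [[0.0] * 8 for _ in range(8)]
sharp[3][3] = 1.0  # confident, in-view point at image pixel (1, 1)
broad = [[0.0] * 8 for _ in range(8)]
for r, c, v in [(0, 6, 0.2), (1, 5, 0.2), (1, 6, 0.4), (1, 7, 0.2), (2, 6, 0.2)]:
    broad[r][c] = v  # diffuse density centred on image pixel (4, -1)

print(heatmap_peak(sharp, MARGIN), heatmap_peak(broad, MARGIN))
print(out_of_view(4, -1, W, H), heatmap_spread(broad, MARGIN) > heatmap_spread(sharp, MARGIN))
```

Here the diffuse map peaks just above the image, so its point is flagged as out of view, and its larger spread reflects the lower certainty the text describes for such points.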

![]()

We use a CNN to learn the relationship between partial views and feature point configurations. Specifically, we train the network to produce a 'heat map' of the likely locations of feature points (representing likelihood densities), such as that shown above. To build the training set, we hand-labelled salient points on images that contained a full view of the object, such as the chair in the above example, taken from a variety of viewpoints. We then generated sub-image partial views from each image and linked each of these to the full map of salient point positions as the required network output (how we do this is somewhat more complicated and is a key component of the work; for details see the paper cited below). These sub-images and position maps form the training set, augmented with rotated, translated and scaled versions. An example output from a trained network is shown above.
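The paper's procedure for linking partial views to point positions is more involved than this, but a simplified stand-in shows the basic idea: crop a window from a fully labelled image, re-express every keypoint in the crop's coordinates (so out-of-view points get coordinates outside the crop), and render each one as a Gaussian on a target canvas larger than the crop. The Gaussian targets, the `margin` and `sigma` defaults, and all function names here are assumptions for illustration only.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def crop_keypoints(keypoints: List[Point], x0: int, y0: int) -> List[Point]:
    """Re-express full-image keypoints relative to a crop whose top-left corner is (x0, y0).

    Points outside the crop keep their negative or too-large coordinates;
    these become the out-of-view targets.
    """
    return [(x - x0, y - y0) for x, y in keypoints]


def gaussian_target(kp: Point, crop_w: int, crop_h: int,
                    margin: int, sigma: float) -> List[List[float]]:
    """Render one keypoint as an unnormalised Gaussian (peak 1.0) on a canvas
    covering the crop plus `margin` pixels on every side."""
    x, y = kp
    return [[math.exp(-((c - margin - x) ** 2 + (r - margin - y) ** 2) / (2 * sigma ** 2))
             for c in range(crop_w + 2 * margin)]
            for r in range(crop_h + 2 * margin)]


def make_training_pair(keypoints: List[Point], x0: int, y0: int, crop_w: int, crop_h: int,
                       margin: int = 32, sigma: float = 2.0):
    """Return the crop window and one target map per keypoint (hypothetical helper)."""
    local = crop_keypoints(keypoints, x0, y0)
    targets = [gaussian_target(kp, crop_w, crop_h, margin, sigma) for kp in local]
    return (x0, y0, crop_w, crop_h), targets


# Hypothetical hand-labelled keypoints on a full view of the object.
FULL_KPS = [(10.0, 10.0), (50.0, 12.0)]
window, targets = make_training_pair(FULL_KPS, x0=8, y0=4, crop_w=32, crop_h=32)
# The second keypoint, at (42, 8) in crop coordinates, lies outside the 32x32 crop
# but inside the 96x96 target canvas.
print(len(targets), len(targets[0]), len(targets[0][0]))  # 2 96 96
print(targets[1][8 + 32][42 + 32])                        # 1.0
```

Keypoints that fall beyond even the margin would get no peak on the canvas, so in this simplified scheme the margin bounds how far out of view a point can be predicted.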

Publications

Results

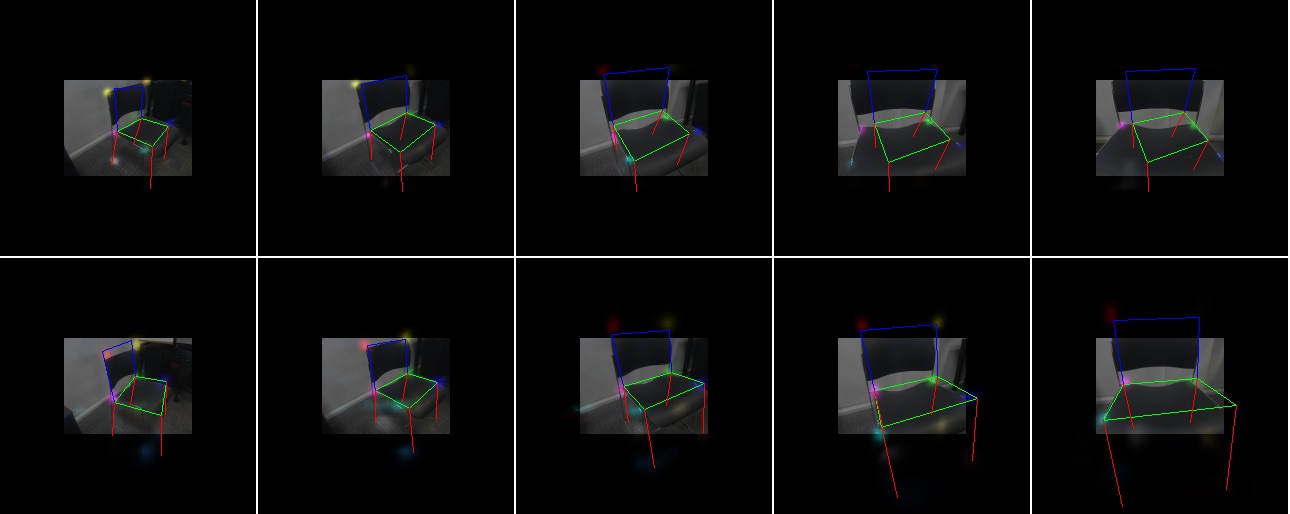

We tested the approach by incorporating the in-view and out-of-view feature predictions within two standard model-based tracking frameworks, one based on particle filtering and one on optimisation, to give real-time estimates of 3-D camera pose. Full details can be found in the above papers. Example results are shown below.

Top: model-based tracking using only in-view feature points; note the loss of track in multiple frames. Bottom: tracking using both in-view and predicted out-of-view features, giving a significant improvement in pose tracking.
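As a rough illustration of how predicted heat maps can feed a particle filter, the sketch below weights candidate poses by the heat-map values at the projected model points, in-view and out-of-view alike. It is a generic measurement step under heavy simplifying assumptions (translation-only poses, nearest-pixel lookup, a hypothetical likelihood floor `FLOOR`), not the formulation used in the papers.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
HeatMap = List[List[float]]
FLOOR = 1e-6  # hypothetical minimum likelihood, so one bad point cannot zero a particle


def project(X: Vec3, t: Vec3, f: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection of model point X for camera translation t (rotation fixed to identity)."""
    z = X[2] + t[2]
    if z <= 0:
        return None
    return f * (X[0] + t[0]) / z + cx, f * (X[1] + t[1]) / z + cy


def lookup(heat: HeatMap, x: float, y: float, margin: int) -> float:
    """Nearest-pixel heat-map value at image point (x, y); the map covers the
    image plus `margin` pixels on every side."""
    r, c = round(y) + margin, round(x) + margin
    if 0 <= r < len(heat) and 0 <= c < len(heat[0]):
        return max(heat[r][c], FLOOR)
    return FLOOR


def particle_weights(particles: Sequence[Vec3], model: Sequence[Vec3],
                     heatmaps: Sequence[HeatMap], f: float, cx: float, cy: float,
                     margin: int) -> List[float]:
    """Normalised weights: product over model points of the heat-map value at each projection."""
    log_w = []
    for t in particles:
        lw = 0.0
        for X, heat in zip(model, heatmaps):
            p = project(X, t, f, cx, cy)
            lw += math.log(lookup(heat, p[0], p[1], margin) if p is not None else FLOOR)
        log_w.append(lw)
    m = max(log_w)  # subtract the max before exponentiating, for numerical stability
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    return [v / total for v in w]


# Hypothetical two-point model and 8x8 image (f=10, principal point (4, 4)),
# with heat maps covering the image plus an 8-pixel margin (24x24).
MODEL = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)]
MARGIN = 8
maps = [[[0.0] * 24 for _ in range(24)] for _ in MODEL]
maps[0][4 + MARGIN][4 + MARGIN] = 1.0   # in-view point predicted at (4, 4)
maps[1][4 + MARGIN][14 + MARGIN] = 1.0  # out-of-view point predicted at (14, 4)
weights = particle_weights([(0.0, 0.0, 0.0), (0.5, 0.0, 0.0)], MODEL, maps,
                           f=10.0, cx=4.0, cy=4.0, margin=MARGIN)
print(weights)
```

With both maps in use, the correctly placed particle takes almost all of the weight. In an optimisation-based tracker, a similar score could instead be maximised directly over the pose rather than evaluated on sampled particles.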