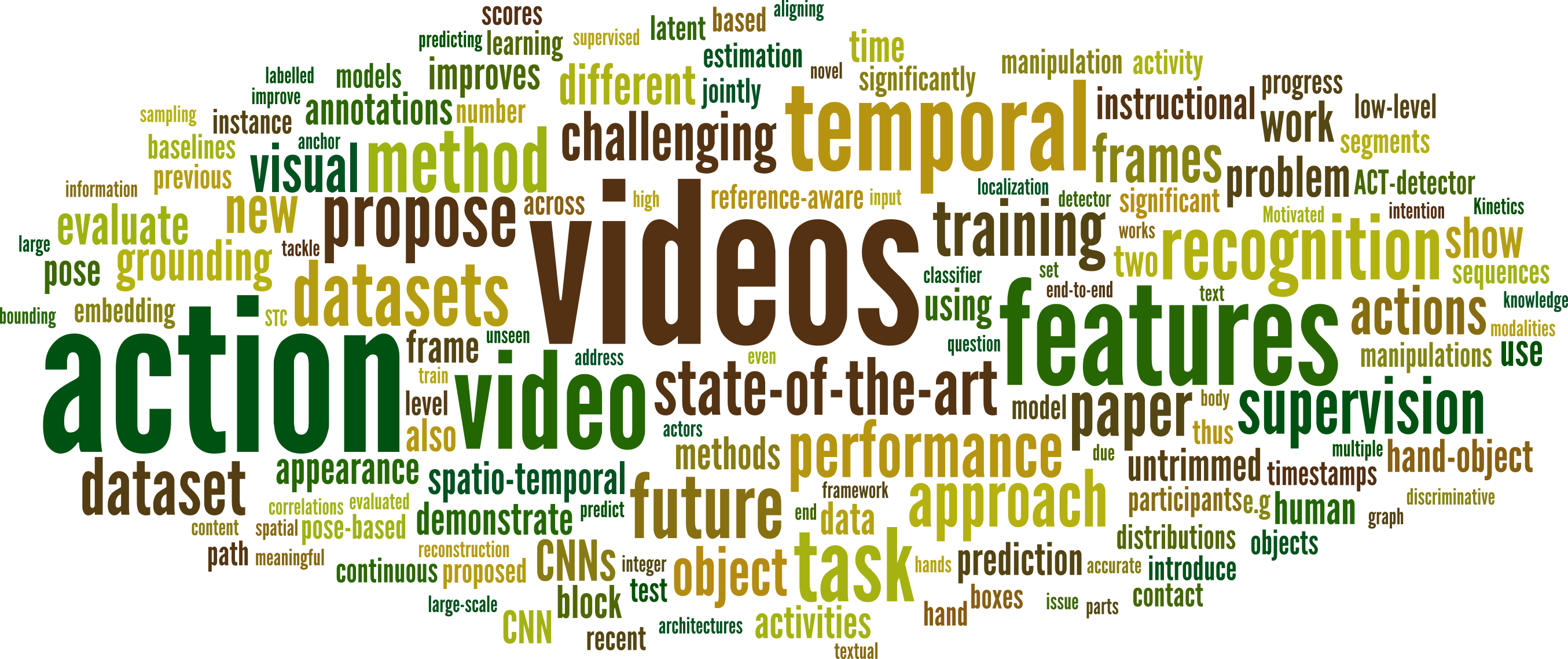

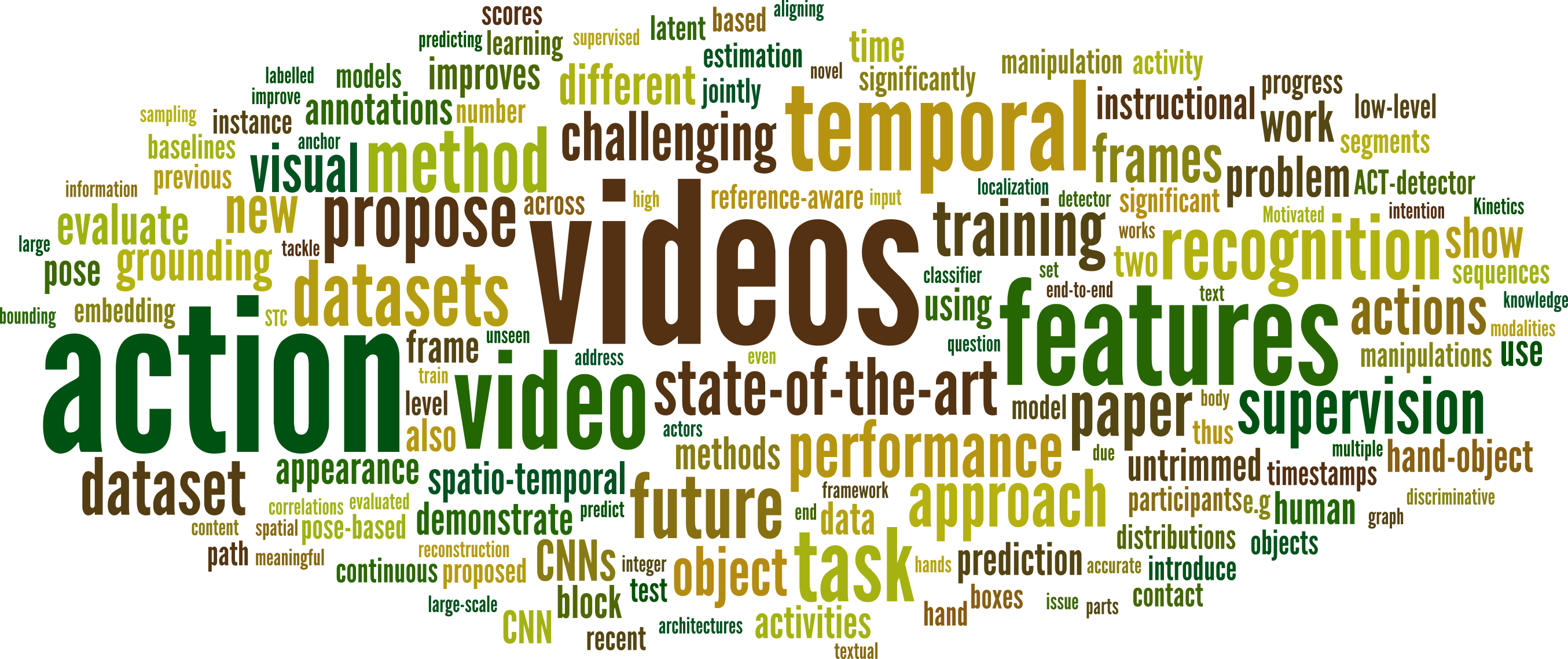

Despite the growing number of large-scale video datasets, new models for video understanding remain a bottleneck for research. This symposium brings together 18 leading researchers who have contributed significantly to video understanding and who have collectively helped collect datasets such as UCF, HMDB, THUMOS, Hollywood, Hollywood2, Kinetics, Charades, ActivityNet, YouTube-8M, YouCook, Breakfast, EPIC-Kitchens, DALY and AVA, amongst others. With a star-studded list of speakers, we aim to ask the question: what is missing in video understanding for it to catch up with the success witnessed in object detection or language translation?

The symposium aims not only to share the latest research but also to give feedback to the community on the major challenges, and to plan an ongoing collaboration on video understanding that goes beyond the simplified task of classifying trimmed videos.

The BMVA symposium on Video Understanding will be held in Central London, at the British Computer Society on Wednesday 25th of September 2019. The event will bring together 150 students, faculty, and research scientists for an opportunity to exchange ideas and connect over a mutual interest in video understanding.

Sponsored by: British Machine Vision Association (BMVA) and IBM Research

| Time | Session | Affiliation |
|---|---|---|
| 8:50-9:00 | Welcome and Introduction | |
| 9:00-9:45 | Keynote: Jeff Zacks | Washington University in St. Louis |
| 9:45-10:05 | Rahul Sukthankar | Google, CMU |
| 10:05-10:25 | Cees Snoek | University of Amsterdam |
| 10:25-10:45 | Mubarak Shah | University of Central Florida |
| 10:45-11:10 | Coffee Break | |
| 11:10-11:30 | Cordelia Schmid | INRIA |
| 11:30-11:50 | Dima Damen | University of Bristol |
| 11:50-12:10 | Juan Carlos Niebles | Stanford University - Toyota |
| 12:10-12:30 | Du Tran | |
| 12:30-12:50 | Jason Corso | University of Michigan |
| 12:50-14:00 | Lunch and Poster Session | |

| Poster | Title | Presenter | Affiliation |
|---|---|---|---|
| 1 | Recognizing Unseen Actions from Objects | Pascal Mettes | University of Amsterdam |
| 2 | RVOS: End-to-End Recurrent Network for Video Object Segmentation | Xavier Giro-i-Nieto | UPC Barcelona |
| 3 | ARCHANGEL: Tamper-proofing Video Archives using Temporal Content Hashes on the Blockchain | Tu Bui | CVSSP - University of Surrey |
| 4 | Use What You Have: Video retrieval using representations from collaborative experts | Samuel Albanie | Visual Geometry Group, University of Oxford |
| 5 | The Three-dimensional Extended Activity Multi-camera (TEAM) Dataset: A Realistic Structured Set of Human Action Sequences | Matthew Bezdek | Washington University in St. Louis |
| 6 | Self-supervised Learning for Video Correspondence Flow | Weidi Xie | University of Oxford |
| 7 | Grounded Video Description | Luowei Zhou | University of Michigan |
| 8 | Action Recognition from Single Timestamp Supervision in Untrimmed Videos | Davide Moltisanti | University of Bristol |
| 9 | Temporal Salience Based Human Action Recognition | Salah Al-Obaidi | University of Sheffield |
| 10 | Action Completion: A Temporal Model for Moment Detection | Farnoosh Heidarivincheh | University of Bristol |
| 11 | Timeception for Complex Action Recognition | Noureldien Hussein | University of Amsterdam |
| 12 | Fine-Grained Action Retrieval through Multiple Parts-of-Speech Embeddings | Michael Wray | University of Bristol |
| 13 | | | |
| 14 | The Pros and Cons: Rank-aware Temporal Attention for Skill Determination in Long Videos | Hazel Doughty | University of Bristol |
| 15 | Holistic Large Scale Video Understanding | Mohsen Fayyaz | University of Bonn |
| 16 | Learning object detection without labeling from video footage with transcript | Rami Ben-Ari | IBM Research-AI |
| 17 | Weakly Supervised Action Segmentation Using Mutual Consistency | Yaser Souri | University of Bonn |
| 18 | Temporal Binding Network (TBN): Audio-Visual Temporal Binding for Egocentric Action Recognition | Evangelos Kazakos | University of Bristol |
| 19 | Two-in-one Stream Action Detection | Jiaojiao Zhao | University of Amsterdam |
| 20 | Benchmarking action recognition models on EPIC-Kitchens | Will Price | University of Bristol |
| 21 | Detection of Human-Object Interactions in Video Streams | Lilli Bruckschen | University of Bonn |
| 22 | Multi-Modal Domain Adaptation for Fine-grained Action Recognition | Jonathan Munro | University of Bristol |
| 23 | Unsupervised learning of action classes with continuous temporal embedding | Anna Kukleva | Inria, University of Bonn |
| 24 | Advances Visual Sequence Learning for Action Recognition via 3D-CNN and GRU Architectures | Aishah Alsehaim | Durham University |
| 25 | Audio-visual explanations for activity recognition using discriminative relevance | Harri Taylor | Cardiff University |
| 26 | Explaining Temporal Information in Activity Recognition for Situational Understanding | Liam Hiley | Cardiff University |
| 27 | Context-aware Mouse Behaviour Recognition using Hidden Markov Models | Zheheng Jiang | University of Leicester |

| Time | Session | Affiliation |
|---|---|---|
| 14:00-14:20 | Andrew Zisserman | University of Oxford |
| 14:20-14:40 | Lorenzo Torresani | Dartmouth College, Facebook |
| 14:40-15:00 | Hilde Kuehne | MIT-IBM Watson Lab |
| 15:00-15:20 | Ivan Laptev | INRIA |
| 15:20-15:40 | Nazli Ikizler-Cinbis | Hacettepe University, Ankara |
| 15:40-16:20 | Coffee Break | |
| 16:20-16:40 | Jan van Gemert | Delft University of Technology |
| 16:40-17:00 | Juergen Gall | University of Bonn |
| 17:00-17:20 | Angela Yao | Singapore University |
| 17:20-17:40 | Efstratios Gavves | University of Amsterdam |
| 17:40-18:00 | Discussion and Concluding Remarks | |

Register through the BMVA website.